Line-delimited JSON with Jackson

(aka Streaming Read/Write of Long JSON data streams)

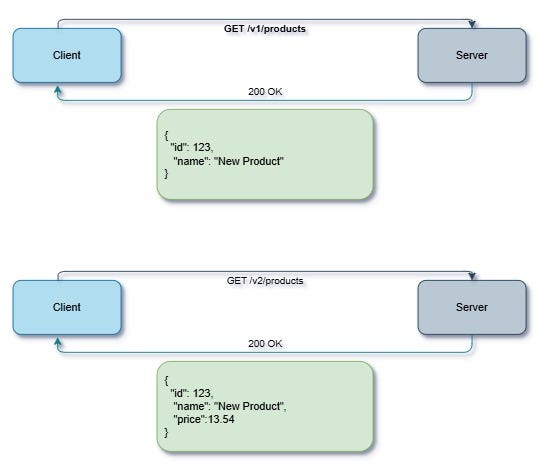

The JSON specification originally defined a “JSON text” as just a JSON Object or Array (*), and most JSON usage examples focus on that: for example, how to read an HTTP request whose payload is a JSON Object, or how to write a matching HTTP response whose payload is similarly a JSON Object.

There are countless articles covering such usage: with Jackson you would usually use something like this:

    import com.fasterxml.jackson.databind.json.JsonMapper;

    final JsonMapper mapper = new JsonMapper();

    // bind the request payload to a POJO
    MyRequest reqOb = mapper.readValue(httpRequest.getInputStream(), MyRequest.class);
    // or: JsonNode reqAsTree = mapper.readTree(...)

    // ... processing, and then
    MyResponse respOb = new MyResponse(.....);
    // write the result to the response (not the request) stream
    mapper.writeValue(httpResponse.getOutputStream(), respOb);

or maybe the actual usage would be handled by a framework like Spring Boot and mostly hidden.

But this is not the only kind of JSON content that is in use.

(*) The original JSON specification (RFC 4627) did not allow scalar values (Strings, Booleans, Numbers) at the root level; the current one (RFC 8259) does, as do most libraries and frameworks.

Streaming Large Data Sets as a Sequence of Values

While single-value input and output is sufficient for request/response patterns (as well as many other use cases) there are cases where a slightly different abstraction is useful: a sequence of values.

The most common representation is so-called “line-delimited JSON”:

    {"id":1, "value":137 }
    {"id":2, "value":256 }
    {"id":3, "value":-89 }

At first it may seem odd to consider such a non-standard (with respect to the JSON specification) representation (doesn’t JSON specifically have Array values?), but there are reasons why this representation can be better than a root-level wrapper Array:

- Many (if not most) frameworks read root-level values in full, so they would try to read the whole JSON Array into memory, preventing incremental processing (and using a lot of memory as well)

- If content is a JSON Array, entries cannot be split without full JSON parsing; with a separator like linefeed (and NOT using pretty-printing) it is simple to split values by separator, without further decoding

- When writing a content stream there is no need to keep track of whether a separating comma is needed, nor to add the closing “]” marker; values can simply be appended at the end (possibly by concurrent producers)
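
The second and third points can be illustrated without any JSON library at all. The following is a minimal sketch using only the JDK (Java 11 or later); the class and method names (LineDelimitedSketch, appendEntry, splitEntries, demo) are made up for illustration, and it assumes each entry has already been serialized as a single line with no embedded linefeeds:

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper, not part of Jackson: treats entries as opaque lines
class LineDelimitedSketch {

    // Appends one pre-serialized JSON value plus a linefeed;
    // no comma or closing "]" bookkeeping is needed
    static void appendEntry(Path file, String json) {
        try {
            Files.writeString(file, json + "\n", StandardCharsets.UTF_8,
                    StandardOpenOption.CREATE, StandardOpenOption.APPEND);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Splits content into entries by linefeed only; nothing is parsed as JSON here
    static List<String> splitEntries(Path file) {
        try (Stream<String> lines = Files.lines(file, StandardCharsets.UTF_8)) {
            return lines.filter(line -> !line.isBlank()).collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Writes two entries to a temporary file, then splits them back out
    static List<String> demo() {
        try {
            Path file = Files.createTempFile("entries", ".ldjson");
            try {
                appendEntry(file, "{\"id\":1,\"value\":137}");
                appendEntry(file, "{\"id\":2,\"value\":256}");
                return splitEntries(file);
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Each String returned by splitEntries is one complete JSON value, so it can be handed to a JSON parser on its own, for example by separate workers.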

For these reasons, there is a simple technique known as “Line-Delimited JSON” (one of a couple of related techniques for JSON Streaming) in which you can simply append JSON values when writing, and conversely read a sequence on the other end.

Jackson fully supports both “Line-Delimited” JSON and related “Concatenated JSON”. Let’s look at how.

Reading Line-Delimited JSON with Jackson

Using our earlier simple data example, we can create a MappingIterator and use that for reading:

    import com.fasterxml.jackson.databind.JsonNode;
    import com.fasterxml.jackson.databind.MappingIterator;

    final JsonMapper mapper = ...;
    final File input = new File("data.ldjson"); // or Reader, InputStream

    // try-with-resources closes the iterator (and the underlying parser)
    try (MappingIterator<JsonNode> it = mapper.readerFor(JsonNode.class)
            .readValues(input)) {
        while (it.hasNextValue()) {
            JsonNode node = it.nextValue();
            int id = node.path("id").intValue(); // or "asInt()" to coerce
            int value = node.path("value").intValue();
            // ... do something with it
        }
    }

and that’s pretty much it. Alternatively we could of course use a POJO:

    static class Value {
        public int id;
        public int value;
    }

    try (MappingIterator<Value> it = mapper.readerFor(Value.class)
            .readValues(input)) {
        while (it.hasNextValue()) {
            Value v = it.nextValue();
            int id = v.id;
            int value = v.value;
            // ... process
        }
    }

with the same pattern.

Note: the code would be identical for the “Concatenated JSON” use case. Jackson requires white space separators between other root-level tokens, but not between root-level structural separators ({, }, [, ]); within JSON content the regular rules apply.
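
To make the note above concrete, here is a small sketch that feeds a String mixing concatenated and line-delimited Objects through the same MappingIterator loop. It assumes Jackson 2.10 or later (for JsonMapper) is on the classpath; ConcatenatedJsonDemo and readIds are illustrative names only:

```java
import com.fasterxml.jackson.databind.JsonNode;
import com.fasterxml.jackson.databind.MappingIterator;
import com.fasterxml.jackson.databind.json.JsonMapper;

import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

// Illustrative class name; only the Jackson types are real API
class ConcatenatedJsonDemo {

    // Reads every root-level Object from the content and collects its "id"
    static List<Integer> readIds(String content) {
        JsonMapper mapper = new JsonMapper();
        List<Integer> ids = new ArrayList<>();
        try (MappingIterator<JsonNode> it = mapper.readerFor(JsonNode.class)
                .readValues(content)) {
            while (it.hasNextValue()) {
                ids.add(it.nextValue().path("id").intValue());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return ids;
    }
}
```

Called with the first two Objects written back-to-back and the third on its own line, for example `readIds("{\"id\":1}{\"id\":2}\n{\"id\":3}")`, this should return the ids 1, 2 and 3 without any change to the reading code.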

Writing Line-Delimited JSON with Jackson

How about producing line-delimited JSON? Quite similar: the abstraction to use is SequenceWriter (instead of MappingIterator) and so:

    import com.fasterxml.jackson.databind.SequenceWriter;

    final File outputFile = new File("data.ldjson");

    try (SequenceWriter seq = mapper.writer()
            .withRootValueSeparator("\n") // Important! Default value separator is single space
            .writeValues(outputFile)) {
        IdValue value = ...;
        seq.write(value);
    }

and here about the only noteworthy thing is to specify linefeed as the entry separator (you can use an alternative separator as well). If you use Jackson for both reading and writing, the default single-space separator works fine, but other systems likely prefer linefeeds.

Note, too, that you can configure settings like SerializationFeature, StreamWriteFeature and JsonWriteFeature when constructing ObjectWriter.
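
As a rough, self-contained illustration of both points (the linefeed separator and configuring features on the ObjectWriter), the sketch below writes two entries to a String. It assumes Jackson 2.10 or later on the classpath; LineDelimitedWriteDemo, entry and writeEntries are hypothetical names, and Maps stand in for whatever value type you actually use:

```java
import com.fasterxml.jackson.databind.SequenceWriter;
import com.fasterxml.jackson.databind.SerializationFeature;
import com.fasterxml.jackson.databind.json.JsonMapper;

import java.io.IOException;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative class name; only the Jackson types are real API
class LineDelimitedWriteDemo {

    // Builds one entry; LinkedHashMap keeps "id" before "value"
    static Map<String, Object> entry(int id, int value) {
        Map<String, Object> map = new LinkedHashMap<>();
        map.put("id", id);
        map.put("value", value);
        return map;
    }

    // Writes two entries separated by a linefeed and returns the output
    static String writeEntries() {
        JsonMapper mapper = new JsonMapper();
        StringWriter out = new StringWriter();
        try (SequenceWriter seq = mapper.writer()
                // pretty-printing is off by default; stated explicitly because
                // indented output would break the one-value-per-line layout
                .without(SerializationFeature.INDENT_OUTPUT)
                .withRootValueSeparator("\n")
                .writeValues(out)) {
            seq.write(entry(1, 137));
            seq.write(entry(2, 256));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toString();
    }
}
```

Note that the separator is written between values rather than after each one, so the output does not end with a trailing linefeed.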

Bonus: Error Recovery with Line-Delimited JSON

Another useful feature with streaming reads is that it is possible to catch and gracefully handle some deserialization issues. For example:

    import com.fasterxml.jackson.databind.JsonMappingException;

    final int MAX_ERRORS = 5;
    int line = 0; // entry count; matches the line number with one value per line
    int failCount = 0;

    try (MappingIterator<Value> it = mapper.readerFor(Value.class)
            .readValues(input)) {
        while (it.hasNextValue()) {
            ++line;
            Value v;
            try {
                v = it.nextValue();
                // process normally
            } catch (JsonMappingException e) {
                // data-binding problem: report it and try to continue with the next entry
                ++failCount;
                System.err.println("Problem ("+failCount+") on line "+line+": "+e.getMessage());
                if (failCount == MAX_ERRORS) {
                    System.err.println("Too many errors: aborting processing");
                    break;
                }
            }
        }
    }

Note that this technique allows catching some of the issues (for example, if you get a String when expecting a number, or an unexpected property) but not everything: most notably, invalid JSON content is often non-recoverable.

This is part of the reason why it is good to keep track of the number of errors and stop processing after a certain number of failures. Similarly, if trying to recover, it is important to validate the resulting entries against business logic.

Nonetheless it is sometimes useful to try to recover processing, if for nothing else then to get more information on context: you may simply try to read one more entry after a failure to give more accurate contextual information.

Convenience methods: Read It All

Although MappingIterator fundamentally exposes an iterator-style interface, there are convenience methods to use if you really just want to read all available entries:

    try (MappingIterator<Value> it = mapper.readerFor(Value.class)
            .readValues(input)) {
        List<Value> all = it.readAll();
    }

Similarly, SequenceWriter has convenience methods for writing the contents of Collections:

    SequenceWriter seq = ...;
    List<IdValue> values = ...;
    seq.writeAll(values);

which iterates over the values of the given Collection and writes them, one by one, to the streaming output.
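
Putting the two convenience methods together, a minimal round-trip sketch could look like the following. It assumes Jackson 2.10 or later on the classpath; ReadAllWriteAllDemo and copy are illustrative names, and the nested Value class simply mirrors the POJO used earlier:

```java
import com.fasterxml.jackson.databind.MappingIterator;
import com.fasterxml.jackson.databind.SequenceWriter;
import com.fasterxml.jackson.databind.json.JsonMapper;

import java.io.IOException;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.util.List;

// Illustrative class name; only the Jackson types are real API
class ReadAllWriteAllDemo {

    // Same shape as the Value POJO from the reading example
    static class Value {
        public int id;
        public int value;
    }

    // Reads all entries from the input, then writes them back line-delimited
    static String copy(String input) {
        JsonMapper mapper = new JsonMapper();
        StringWriter out = new StringWriter();
        try (MappingIterator<Value> it = mapper.readerFor(Value.class)
                     .readValues(input);
             SequenceWriter seq = mapper.writer()
                     .withRootValueSeparator("\n")
                     .writeValues(out)) {
            List<Value> all = it.readAll(); // buffers every entry in memory
            seq.writeAll(all);              // writes them one by one
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toString();
    }
}
```

Keep in mind that readAll() gives up the incremental-processing benefit discussed earlier, so it is best suited to inputs known to be small.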

Line-Delimited [INSERT FORMAT] with Jackson?

As you may have noticed, the API exposed is not JSON-specific at all and could work with other formats as well. Whether it actually works (for reading and/or writing) depends on the format in question. At least the following Jackson backends should work:

- CSV (jackson-dataformat-csv): reading and writing value sequences is actually quite natural

- Smile (jackson-dataformat-smile): since it has the same logical structure as JSON, will work exactly the same!

- CBOR (jackson-dataformat-cbor): similar to Smile, should work as well, as per “CBOR Sequences” (RFC-8742), but I need to verify (see jackson-dataformats-binary#238)

- YAML (jackson-dataformat-yaml): single physical file or stream may contain multiple documents so the same approach should work as well

- Avro (jackson-dataformat-avro): should also “just” work and has been tested to some degree

For others there may be a way to use this API (Protobuf, Ion), but more work would be needed. For yet others (XML, Properties) it may not make sense at all, due to format limitations.

I plan on covering the CSV use case very soon; in fact, the Error Recovery functionality mentioned earlier was specifically improved to work well with CSV.